In my thesis, I state:

Computation provides us with unprecedented tools to implement such a vision. Perhaps the most fundamental agreement with my viewpoint comes from an unusual source: Encyclopedia Pictura, a trio of motion-graphic artists who have made extraordinary music videos for artists such as Björk and for clients like Spore.

Near the bottom of their website menu is a discreet link to a page devoted to visual language: a set of drawings and, eventually, doodles that outline their vision for an augmented reality application that uses morphological text relationally appropriate to the sound of the speakers' voices.

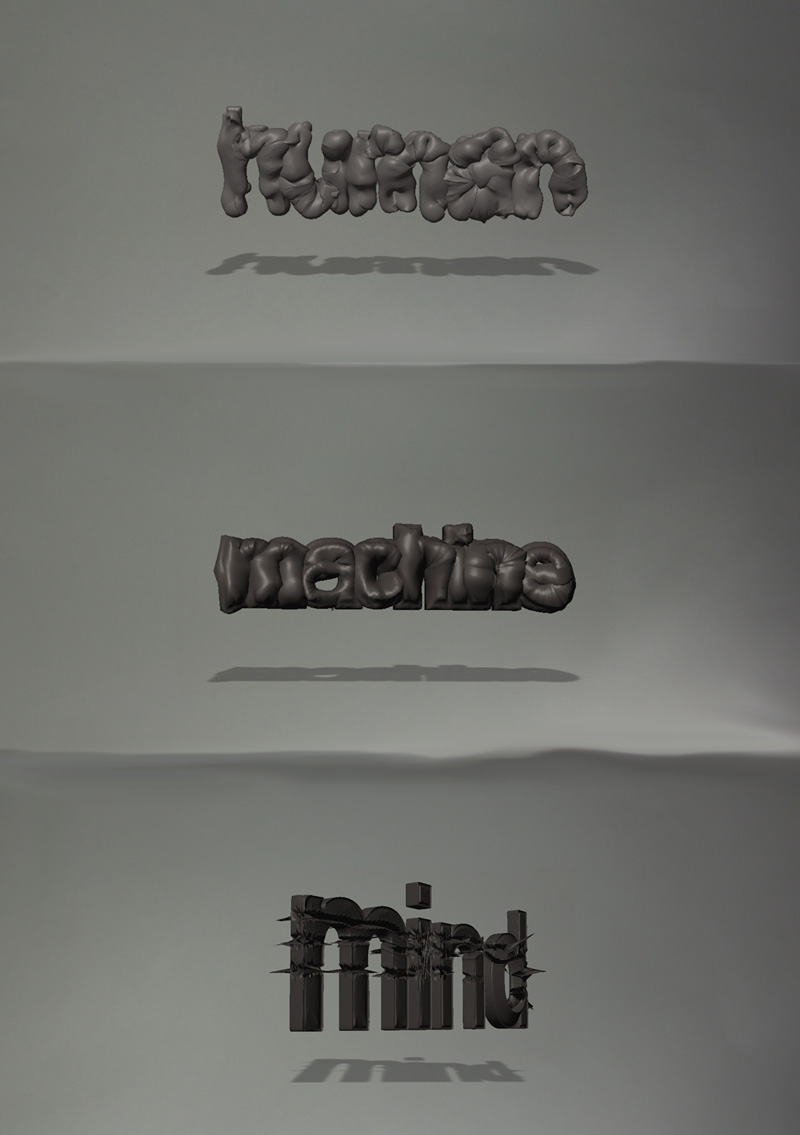

In other words, they propose precisely what I have advocated in my thesis and worked towards with works like Human–Mind–Machine. Except they have actually gone further, providing one-to-one relationships between sounds and candidate shapes.

They also reference the Bouba-Kiki effect, and rely upon the reported 98% consensus for best fit between shapes and sounds, an effect first documented by Wolfgang Köhler in 1929. They outline a research process for making computationally enhanced visual language a realistic goal. They propose a study where volunteers choose which forms feel most appropriate to phonetic features of speech. They also propose recording “how changes in pitch and volume alter these relationships”. Essentially, the prosody of synaesthesia.

Using the statistical data derived from their proposed study, they would then “sculpt a library of selected forms”, animate these forms, implement logical behaviours and interaction characteristics, and use them in an augmented reality application. In essence, they are advocating a process that moves from statistical analysis of people’s archetypal predispositions toward an appropriate match between the sound of a word and its visual representation.
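To make the statistical step concrete, here is a minimal sketch of how responses from such a study might be tallied. It is my own illustration, not anything Encyclopedia Pictura have published: the function name `consensus_forms`, the feature labels ("plosive", "nasal"), the form labels ("spiky", "rounded") and the sample responses are all invented for the example. Each response pairs a phonetic feature with the form a volunteer chose, and the sketch keeps the most-chosen form for each feature together with the share of volunteers who agreed.

```python
from collections import Counter, defaultdict


def consensus_forms(responses):
    """Map each phonetic feature to (most-chosen form, share of votes).

    `responses` is an iterable of (feature, form) pairs, one per
    volunteer choice. All labels are hypothetical placeholders.
    """
    votes = defaultdict(Counter)
    for feature, form in responses:
        votes[feature][form] += 1

    library = {}
    for feature, counts in votes.items():
        form, n = counts.most_common(1)[0]  # top-voted form for this feature
        library[feature] = (form, n / sum(counts.values()))
    return library


# Invented sample data: (phonetic feature, form the volunteer picked)
responses = [
    ("plosive", "spiky"), ("plosive", "spiky"), ("plosive", "rounded"),
    ("nasal", "rounded"), ("nasal", "rounded"),
]

library = consensus_forms(responses)
for feature, (form, share) in library.items():
    print(f"{feature}: {form} ({share:.0%} agreement)")
```

The agreement share is the interesting number: a feature whose best form wins only a slim majority is a weak candidate for the library, while one approaching the Bouba-Kiki level of consensus is a strong one. Recording pitch and volume alongside each response would simply add more fields to the key being tallied.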

And, to repeat a bit, these representations would not simply be static letterforms but mobile, mutating, animated morphological letterforms. Letter creatures would erupt from our mouths. Words would be radical vibratory presences.